Professional camcorders, whether PTZ cameras, box cameras, document cameras or high-end webcams, evolve hand in hand with the technology behind image sensors, the devices that convert the incident light collected by the lens into an electrical signal that is then processed by downstream electronics. The sensor is the key component on which image quality depends. Contrary to what one might think, that quality is not linked to the total pixel count (the number of megapixels 'spread' over the surface area that defines the sensor size) but to the size of the individual pixel. This is usually expressed as the pixel pitch: the distance between the centre of one pixel and the centre of the adjacent pixel, which equals the pixel size when there is no gap between one pixel and the next.

Larger pixels capture more light and are therefore more efficient: they generate more current than smaller ones. This is why, for the same total pixel count, a larger sensor performs better than a smaller one, especially in terms of noise, as we will see in a moment.

The most widely used sensors in mid- to high-end digital camcorders are of the CMOS type (Complementary Metal-Oxide-Semiconductor), which have almost completely replaced CCD (charge-coupled device) sensors, the type most commonly found in CCTV surveillance cameras.

The sensors commonly used in camcorders have their pixels arranged in a regular matrix (rows and columns), with nominal sizes ranging from 1/2.3" (corresponding to 6.17 x 4.55 mm) to 1" (13.2 x 8.8 mm) and resolutions ranging from 2 to 12 megapixels. The world's largest manufacturers of image sensors for these devices are Sony, OmniVision, Samsung, Canon and Toshiba.
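
To make the link between sensor size, pixel count and pixel pitch concrete, here is a minimal sketch in Python. It assumes square pixels that tile the whole sensor area with no gaps (the same simplification made above), so the figures are approximate; the function name `pixel_pitch_um` is ours, chosen for illustration.

```python
import math

def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Approximate pixel pitch in micrometres.

    Assumes square pixels covering the full sensor area with no gaps.
    """
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    pixel_count = megapixels * 1_000_000
    return math.sqrt(area_um2 / pixel_count)

# Sensor dimensions quoted in the text, both at 12 megapixels.
sensors = {'1/2.3"': (6.17, 4.55), '1"': (13.2, 8.8)}
for name, (w, h) in sensors.items():
    pitch = pixel_pitch_um(w, h, 12)
    print(f"{name}: pitch ~ {pitch:.2f} um, pixel area ~ {pitch ** 2:.2f} um^2")
```

At the same 12 megapixels, the 1" sensor works out to a pitch of roughly 3.1 µm against roughly 1.5 µm for the 1/2.3" sensor, so each of its pixels has about four times the light-collecting area.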

The problem of noise

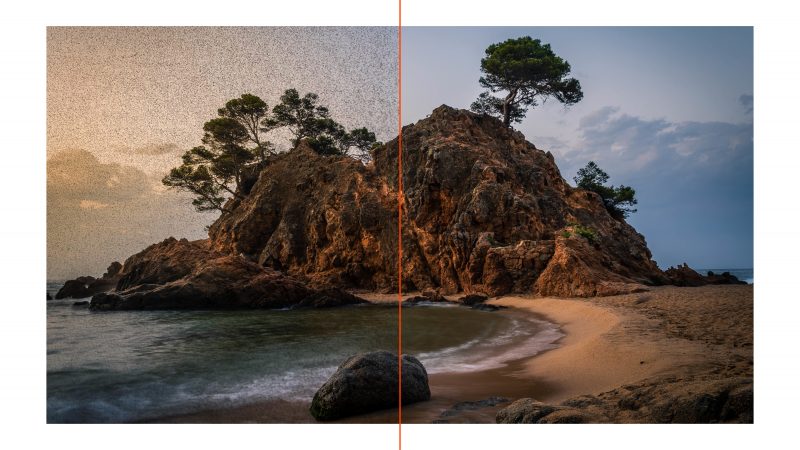

We mentioned earlier the problem of noise, which plagues all digital image capture systems. In video it can become very serious in poorly lit scenes. It arises because the sensor generates a current, albeit a weak one, even when it is in the dark; this current is amplified by the subsequent circuits and shows up as noise. The phenomenon is well known in photography, but it is even more troublesome in video because the 'grain' changes from one frame to the next. The result is a distracting effect that prevents details from being seen clearly, decreases the perceived resolution (noise suppression circuits tend to smooth away the details themselves) and alters colour fidelity. It is a kind of graininess that we have all experienced and that becomes unacceptable on poor quality video cameras, making them impossible to use in certain circumstances.
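
Because the grain changes from one frame to the next while a static scene does not, one classic way to reduce it is to average each pixel over time. The sketch below illustrates this with a simple recursive temporal filter on simulated data. It is only an illustration under our own assumptions (Gaussian noise, a perfectly static scene, hypothetical function names), not the algorithm used by any particular camera.

```python
import random
import statistics

def temporal_denoise(frames, alpha=0.2):
    """Recursive temporal filter: out[t] = alpha * in[t] + (1 - alpha) * out[t-1]."""
    filtered = []
    previous = None
    for frame in frames:
        if previous is None:
            current = list(frame)  # first frame passes through unchanged
        else:
            current = [alpha * x + (1 - alpha) * p for x, p in zip(frame, previous)]
        filtered.append(current)
        previous = current
    return filtered

def simulate_dark_scene(n_frames=200, n_pixels=64, level=20.0, sigma=5.0, seed=0):
    """Static, dimly lit scene: constant level plus fresh random noise per frame."""
    rng = random.Random(seed)
    return [[level + rng.gauss(0.0, sigma) for _ in range(n_pixels)]
            for _ in range(n_frames)]

frames = simulate_dark_scene()
filtered = temporal_denoise(frames)

# Spread of pixel values in the last frame, before and after filtering.
raw_noise = statistics.pstdev(frames[-1])
filtered_noise = statistics.pstdev(filtered[-1])
print(f"raw noise: {raw_noise:.2f}, filtered noise: {filtered_noise:.2f}")
```

A smaller `alpha` averages over more frames and suppresses more noise, but on moving subjects it leaves trails, which is one reason real noise reduction has to trade grain against detail.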

Thanks to the power of image processors, increasingly sophisticated digital algorithms can detect the noise in video footage in real time and limit it, giving the transmitted or recorded image better quality. In general, therefore, work is being done on improving sensor technology on the one hand and image processing algorithms on the other. It is not yet possible to recover detail that the sensor never captured. However, sophisticated interpolation techniques have been introduced that estimate additional pixels from adjacent ones in order to increase perceived resolution and detail. In parallel, powerful facial recognition algorithms have been developed that work even with poorly defined images, which is indispensable wherever a camera is pointed at a speaker, for example during a lecture or conference.
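
As an illustration of how new pixels can be estimated from adjacent ones, the following sketch implements plain bilinear interpolation on a tiny greyscale image. It is the simplest textbook technique, shown here only to make the idea tangible; the interpolation used in camera processors is considerably more elaborate, and the function name is ours.

```python
def bilinear_upscale(image, new_height, new_width):
    """Upscale a 2D list of grey levels by weighting the four nearest source pixels."""
    height, width = len(image), len(image[0])
    result = []
    for i in range(new_height):
        # Position of this output row in source coordinates.
        y = i * (height - 1) / (new_height - 1) if new_height > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, height - 1)
        fy = y - y0
        row = []
        for j in range(new_width):
            x = j * (width - 1) / (new_width - 1) if new_width > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, width - 1)
            fx = x - x0
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        result.append(row)
    return result

small = [[0, 100],
         [100, 200]]
big = bilinear_upscale(small, 3, 3)
for row in big:
    print(row)
```

The new values in the 3 x 3 result lie midway between their neighbours: the image has more pixels, but no information that was not already present in the original four.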

Human Detection and Artificial Intelligence (AI)

Human Detection and facial recognition in video cameras rely on algorithms that can identify the silhouette or face of a person. Facial recognition (Face Detection), specifically, is performed by biometric software that maps an individual's facial characteristics (including real poses and expressions) mathematically and stores the data as a 'faceprint'. Supported by the power of DSPs, these algorithms compare the acquired image in real time with an internal database containing the biometric data, in order to detect the face and thus ensure accurate focus, exposure and tracking of an individual moving within a more or less wide space.

In this context, the term artificial 'intelligence' denotes nothing more than an extension of face recognition: detecting not only one or more human faces but also the characteristics of the framed scene itself, such as the contrast and brightness of the subject relative to the background, possible colour casts and particular elements or shot types, together with functions such as video noise reduction and auto-tracking in presenter or zone mode.

An example? The automatic framing of the field of view (FOV) used on some AVer models. Automatic framing makes holding a meeting easier: instead of manually adjusting the frame to include all the participants by changing the angle of the lens, you simply press a button and let artificial intelligence set the correct FOV (angle of view).
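
The geometry behind such a feature can be sketched in a few lines. The example below assumes that a detector has already reported the horizontal angle of each participant relative to the camera axis, then picks a pan angle and a FOV wide enough to include everyone. The function name, margin and FOV limits are hypothetical values chosen for illustration; this is not AVer's algorithm.

```python
def auto_frame(angles_deg, margin_deg=5.0, min_fov_deg=20.0, max_fov_deg=120.0):
    """Return (pan_deg, fov_deg) that covers every detected participant.

    angles_deg: horizontal angle of each person, negative to the left.
    """
    if not angles_deg:
        # Nobody detected: fall back to the widest view, camera centred.
        return 0.0, max_fov_deg
    leftmost, rightmost = min(angles_deg), max(angles_deg)
    pan = (leftmost + rightmost) / 2.0                # aim at the middle of the group
    fov = (rightmost - leftmost) + 2.0 * margin_deg   # add a margin on each side
    fov = max(min_fov_deg, min(max_fov_deg, fov))     # stay within assumed lens limits
    return pan, fov

pan, fov = auto_frame([-30.0, 10.0, 25.0])
print(f"pan {pan:.1f} deg, FOV {fov:.1f} deg")
```

With participants at -30, 10 and 25 degrees, the sketch aims the camera 2.5 degrees to the left and sets a 65 degree field of view.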

Increasingly advanced subject tracking

For some years now, the most sophisticated PTZ cameras have had subject tracking systems that work in two dimensions: both when the subject moves from side to side (on-stage tracking) and when it approaches or moves away from the camera.

On-stage tracking mode allows the camera to keep in focus and follow a presenter moving across the stage area, for example to show content on multiple displays or a large projection screen. In this case, focusing is less critical because the movement occurs in a plane perpendicular to the optical axis of the lens, so the subject's distance from the camera changes little. A variant is zone mode, which defines multiple preset zones: when the presenter moves to a specific area, he or she is captured with an (almost) fixed shot rather than a moving one. Content is then sent to several separate displays.
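
The logic of zone mode can be sketched as a simple lookup: the stage is divided into zones, each tied to a camera preset, and the camera changes shot only when the presenter enters a different zone. The zone names, coordinates and preset numbers below are hypothetical, and the sketch assumes the tracker reports the presenter's position as a value between 0 (stage left) and 1 (stage right).

```python
from dataclasses import dataclass

@dataclass
class Zone:
    name: str
    x_min: float
    x_max: float
    preset: int  # camera preset recalled while the presenter is in this zone

def select_preset(zones, x, current_preset):
    """Return the preset for the zone containing x, or keep the current one."""
    for zone in zones:
        if zone.x_min <= x <= zone.x_max:
            return zone.preset
    return current_preset  # between zones: hold the last shot

zones = [
    Zone("lectern", 0.0, 0.3, preset=1),
    Zone("whiteboard", 0.4, 0.7, preset=2),
    Zone("screen", 0.8, 1.0, preset=3),
]

preset = 1
history = []
for x in [0.1, 0.35, 0.5, 0.75, 0.9]:  # presenter walking across the stage
    preset = select_preset(zones, x, preset)
    history.append(preset)
print(history)
```

Holding the last preset while the presenter is between zones is what keeps the shot (almost) fixed instead of continuously panning.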

Then there is the wide tracking mode, which gives the person being framed the freedom to leave the stage and interact with the audience, approaching or moving away from the camera unpredictably, with the certainty that the camera will remain 'locked on' to its target even if other people or objects cross its path. In this case, focusing is more critical because the subject may move towards the camera and then away from it at random. A fast and reliable autofocus system is therefore indispensable, although the relatively small sensors used in these cameras forgive some error or 'uncertainty' thanks to their rather large depth of field.
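
The remark about depth of field can be checked with the standard thin-lens formulas. The sketch below compares the two sensor sizes quoted earlier at the same angle of view, aperture and subject distance. The focal length, f-number, subject distance and the circle of confusion (taken as the sensor diagonal divided by 1500, a common convention) are all our own assumptions for illustration.

```python
import math

def depth_of_field_mm(focal_mm, f_number, coc_mm, subject_mm):
    """Near and far limits of acceptable sharpness (thin-lens approximation)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return near, math.inf  # everything beyond the near limit is sharp
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far

def compare_sensors(subject_mm=5000.0, f_number=2.8, small_focal_mm=10.0):
    """Depth of field for both sensors framing the same scene."""
    sensors = {"1/2.3 inch": (6.17, 4.55), "1 inch": (13.2, 8.8)}
    small_diagonal = math.hypot(*sensors["1/2.3 inch"])
    results = {}
    for name, (width, height) in sensors.items():
        diagonal = math.hypot(width, height)
        focal = small_focal_mm * diagonal / small_diagonal  # same angle of view
        coc = diagonal / 1500.0                             # assumed convention
        results[name] = depth_of_field_mm(focal, f_number, coc, subject_mm)
    return results

for name, (near, far) in compare_sensors().items():
    print(f"{name}: sharp from {near / 1000:.1f} m to {far / 1000:.1f} m")
```

Under these assumptions the smaller sensor keeps a noticeably deeper range of distances in acceptable focus, which is why a slightly late or imprecise autofocus is less visible on it.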

The tracking modes just described can also be combined. In addition, through a dedicated web user interface, some PTZ models let you simply click on a target subject to quickly adjust the camera angle and start precise tracking, redirecting the camera within moments.